With nearby Silicon Valley driving the development of artificial intelligence, or AI, Uncanny Valley: Being Human in the Age of AI is the first major museum exhibition to reflect on the political and philosophical stakes of AI through the lens of artistic practice. This series highlights select artworks included in the exhibition.

An interactive installation by Lynn Hershman Leeson, Shadow Stalker, extends the artist’s career-long concern with technological surveillance and control into the algorithmic realm of machine learning. The work delivers a harsh critique of the techno-utopian belief in the benevolent efficacy of AI systems by articulating the problematic implications of employing them in the social sphere.

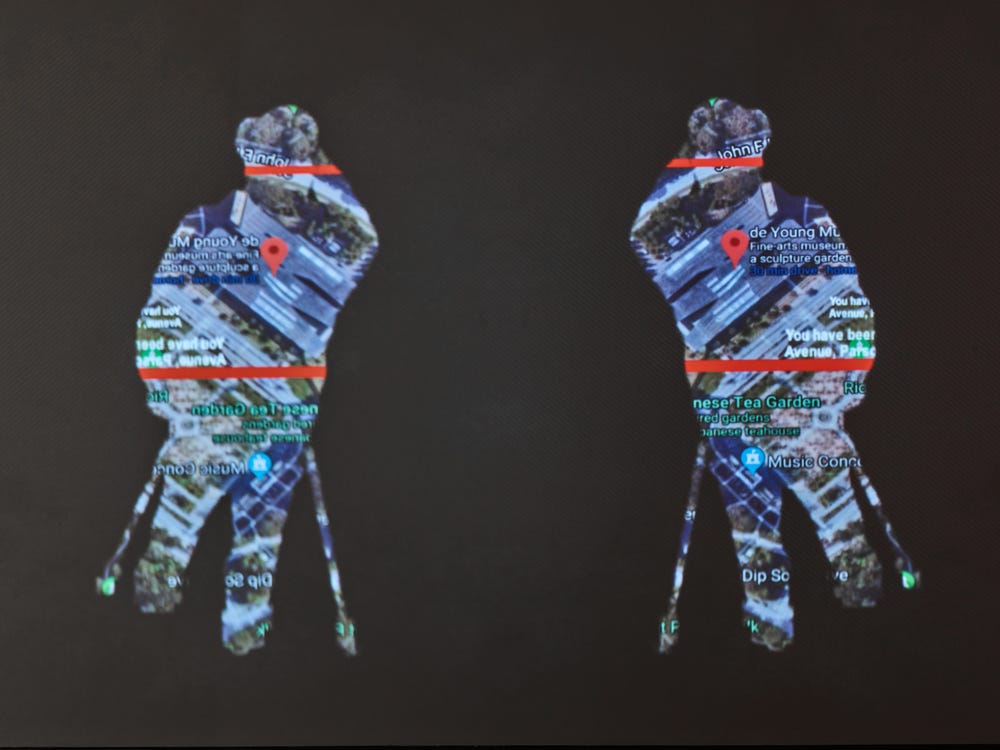

Lynn Hershman Leeson, Shadow Stalker, 2019. Installation view from Uncanny Valley: Being Human in the Age of AI.

Upon entering the installation, visitors are encouraged to share their email addresses. The input triggers an internet search, the results of which materialize within a body-shaped shadow projected onto the wall. The shadow becomes the visual container for the data; its form is activated by the visitor’s digital profile. Even digitally vigilant visitors might be confronted with information about themselves that they do not remember having volunteered. Phone numbers, current and past addresses, professional histories, bank account details, and credit scores emerge, offering a chilling reminder of our unbridled (self-)exposure. By literally externalizing our digital footprint, Hershman Leeson points to our vulnerability in the online sphere. In the accompanying video, a figure whom Hershman Leeson calls the “Spirit of the Deep Web” urges the viewer to fight an economic order in which personal data has become more valuable than oil, a phenomenon that scholar Shoshana Zuboff has defined as “surveillance capitalism.” Surveillance capitalism “is a new economic order that claims human experience as free raw material for hidden commercial practices of extraction, prediction, and sales,” one in which our data trails have become the most valuable commodity. Hershman Leeson’s video highlights how digital users have become investors in their own market value. The video’s admonitions against complacency in the face of these issues are persistent: “Own your profile. Take hold of your avatar. Honor your shadow. Hold it tight. It contains your future and your past, and, like DNA, history refuses to evaporate.”

Also featured in the video is the actress Tessa Thompson. Her narration centers on the history and current use of AI-based predictive policing systems—specifically, a program called PredPol. Adapted from Pentagon-funded research used to forecast battlefield casualties in Iraq, PredPol was developed in 2010 by scientists from the University of California, Los Angeles, together with the Los Angeles Police Department, to aid police forces in crime prevention. The program makes predictions based on datasets of recorded crimes dating back two to five years, which are updated daily with new data. High-risk areas are displayed on a web-based map in real time—enclosed within red boxes—encouraging heightened patrol of those communities.

Lynn Hershman Leeson, video still from Shadow Stalker, 2019. Dual-channel video installation, 12 min. Lynn Hershman Leeson

Many civil rights organizations and research institutions have raised questions about the efficacy and fairness of these systems’ reliance on historical data. AI based primarily on arrest data carries a higher risk of bias, since such data reflects police decisions more than actual crimes. It is, in short, potentially dirty data. Critics also point to the dangers of a “feedback loop”: the results of the algorithms both reflect and reinforce attitudes about which neighborhoods are “bad” and which are “good.” Predictions tend to become self-fulfilling prophecies. If the findings raise the expectation of crime in certain neighborhoods, police are more likely to patrol those areas and make arrests there, and those arrests in turn flow back into the data as further evidence of risk. Furthermore, the lack of transparency and public accountability that comes with such proprietary software prevents proper analysis and understanding of algorithmic recommendations. The artist and researcher Mimi Onuoha termed this “algorithmic violence”: invisible, automated decision-making processes that “affect the ways and degrees to which people are able to live their everyday lives” and that legitimize hierarchy and inequality. Provoking viewers to recognize their (un)witting complicity in these systemic processes, Thompson’s narration concludes with a call to action: “The red square has also been a place of revolution. We decide which we will become: Prisoners or Revolutionaries. Democracy is fragile.”

The third part of the installation forces the viewer’s hand: a web portal based on the same algorithm as PredPol predicts the likelihood of white-collar crimes according to zip code. The implication is clear: data identity is shaped not by algorithms but by a multitude of social and political forces, both visible and hidden, that we must continue to battle. “Art,” Hershman Leeson once said, “is a form of encryption.” Shadow Stalker challenges viewers to take control of their rapidly forming digital identities before it is too late.

Text by Claudia Schmuckli, Curator in Charge of Contemporary Art and Programming; from Beyond the Uncanny Valley: Being Human in the Age of AI, Fine Arts Museums of San Francisco. Available for purchase through the Museum Stores.

Learn more about Uncanny Valley at the de Young museum.

Further Reading

- Shoshana Zuboff, The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power (New York: PublicAffairs, 2019).

- Rashida Richardson, Jason M. Schultz, and Kate Crawford, “Dirty Data, Bad Predictions: How Civil Rights Violations Impact Police Data, Predictive Policing Systems, and Justice,” New York University Law Review 94, no. 192 (May 2019): 192–233, https://www.nyulawreview.org/wp-content/uploads/2019/04/NYULawReview-94-Richardson-Schultz-Crawford.pdf.

- Mimi Onuoha, “Notes on Algorithmic Violence,” GitHub, last updated February 22, 2018, https://github.com/MimiOnuoha/On-Algorithmic-Violence.

- “Art Is a Form of Encryption: Laura Poitras in Conversation with Lynn Hershman Leeson,” PEN America, August 23, 2016, https://pen.org/art-is-a-form-of-encryption-laura-poitras-in-conversation-with-lynn-hershman-leeson/.